We value your privacy

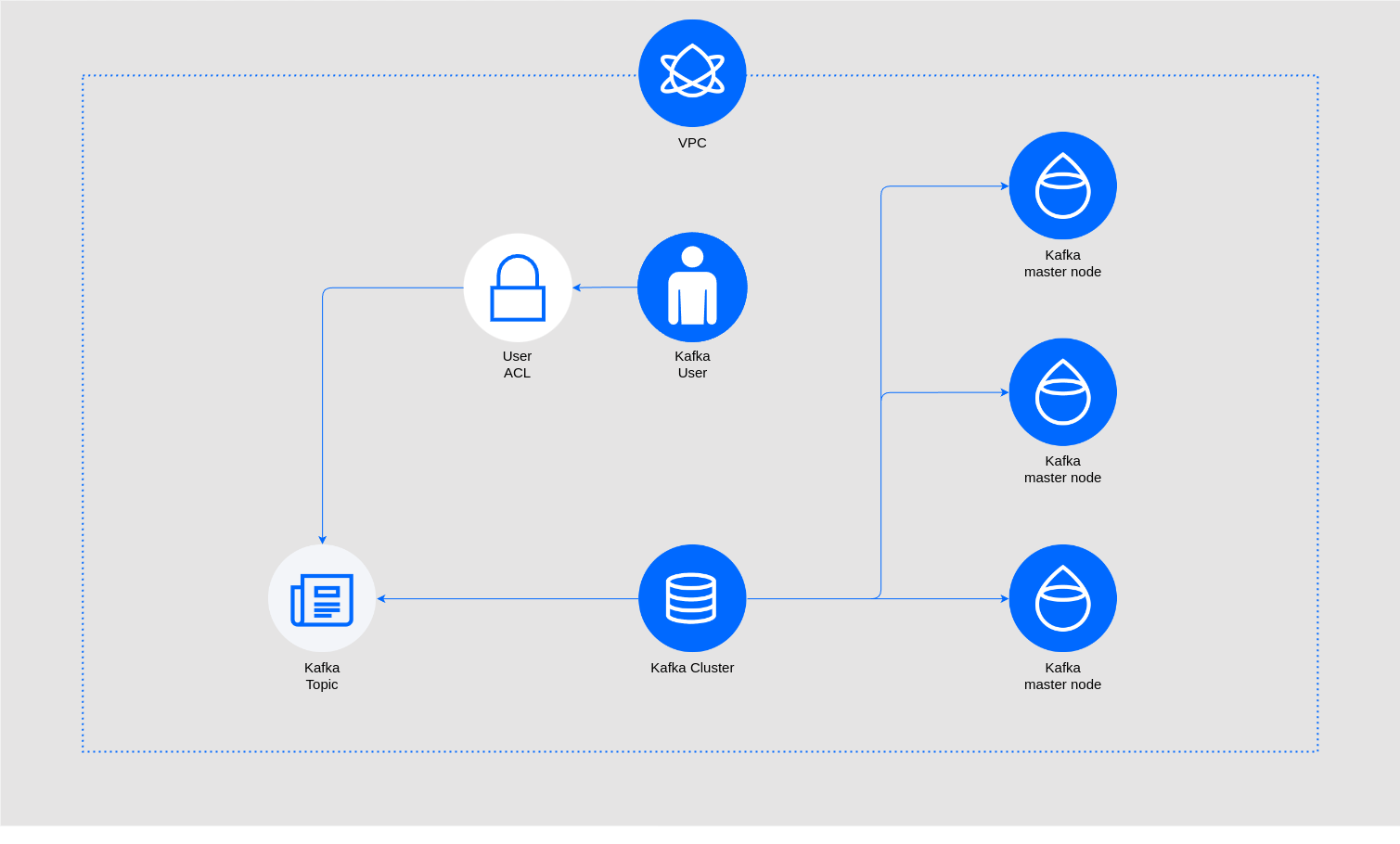

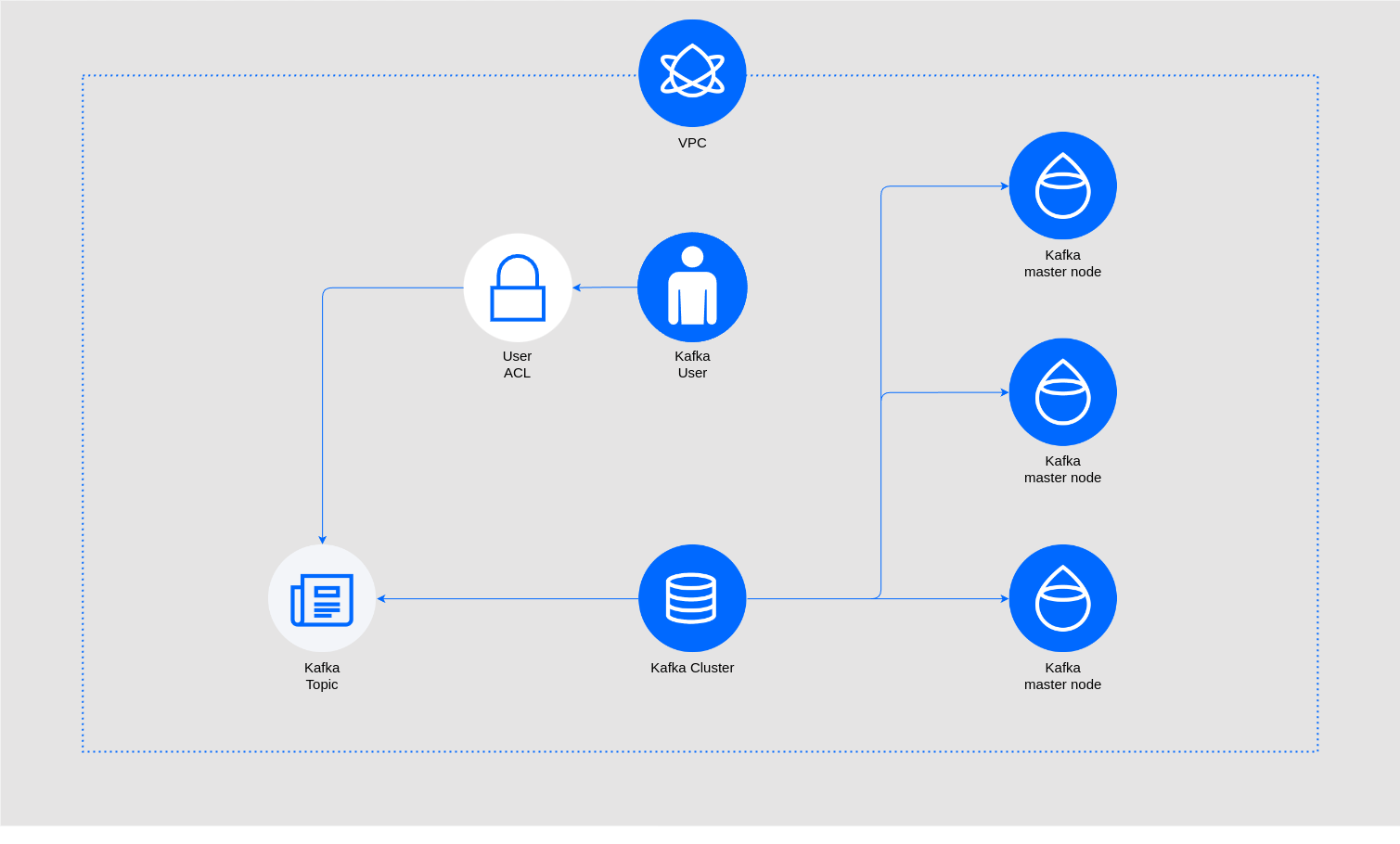

This module creates and manages a Kafka cluster in DigitalOcean and supports managing topics, users, and their permissions.

Once you have a Corewide Solutions Portal account, this one-time action will use your browser session to retrieve credentials:

```shell
terraform login solutions.corewide.com
```

Initialize mandatory providers:
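
A minimal sketch of such a provider setup, assuming the version constraints from the dependency table (`terraform >= 1.3`, `digitalocean/digitalocean ~> 2.34`). The `do_token` variable name is hypothetical; the DigitalOcean provider can also read the token from the `DIGITALOCEAN_TOKEN` environment variable, in which case the `token` argument can be omitted.

```hcl
terraform {
  required_version = ">= 1.3"

  required_providers {
    digitalocean = {
      source  = "digitalocean/digitalocean"
      version = "~> 2.34"
    }
  }
}

# Hypothetical variable name, used here only for illustration
variable "do_token" {
  type      = string
  sensitive = true
}

provider "digitalocean" {
  token = var.do_token
}
```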

Copy and paste into your Terraform configuration and insert the variables:

```hcl
module "tf_do_kafka" {
  source  = "solutions.corewide.com/digitalocean/tf-do-kafka/digitalocean"
  version = "~> 1.1.0"

  # specify module inputs here or try one of the examples below
  ...
}
```

Initialize the setup:

```shell
terraform init
```

The Corewide DevOps team strictly follows the Semantic Versioning Specification to provide our clients with products that have predictable upgrades between versions. We recommend pinning patch versions of our modules using the pessimistic constraint operator (`~>`) to prevent breaking changes during upgrades.

To get new features during upgrades (without breaking compatibility), use `~> 1.1` and run `terraform init -upgrade`.

For the safest setup, use strict pinning with `version = "1.1.0"`.
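
The three pinning options can be compared side by side. The sketch below reuses the module source and inputs from the examples on this page; the ranges in the comments follow Terraform's documented behavior for the `~>` operator.

```hcl
module "kafka" {
  source = "solutions.corewide.com/digitalocean/tf-do-kafka/digitalocean"

  # Pick exactly one constraint:
  version = "~> 1.1.0" # patch updates only: >= 1.1.0, < 1.2.0
  # version = "~> 1.1" # minor and patch updates: >= 1.1.0, < 2.0.0
  # version = "1.1.0"  # strict pin: exactly 1.1.0

  region      = "fra1"
  name_prefix = "develop"
}
```

After loosening a constraint, `terraform init -upgrade` is what actually fetches the newer module version; a plain `terraform init` keeps the version that is already installed.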


All notable changes to this project are documented here.

The format is based on Keep a Changelog, and this project adheres to Semantic Versioning.

- 3.8
- `trusted_sources` variable
- First stable version

Minimal Kafka setup creates a 3-node Kafka cluster with the `db-s-2vcpu-2gb` machine type (by default, a privileged `doadmin` user is created):

```hcl
module "kafka" {
  source  = "solutions.corewide.com/digitalocean/tf-do-kafka/digitalocean"
  version = "~> 1.1"

  region      = "fra1"
  name_prefix = "develop"
}
```

Full Kafka setup creates a 3-node Kafka cluster in the specified VPC with the `db-s-2vcpu-2gb` machine type, 40 GB of storage, a `myuser_topic` topic, and a `myuser` user with admin permissions on that topic. It also adds trusted sources and tags the cluster as `dev`:

```hcl
module "db" {
  source  = "solutions.corewide.com/digitalocean/tf-do-kafka/digitalocean"
  version = "~> 1.1"

  region               = "fra1"
  name_prefix          = "develop"
  vpc_id               = "xxxxxxxx-yyyy-zzzz-xxxx-yyyyyyyyyyyy"
  engine_version       = "3.7"
  node_count           = 3
  machine_size         = "db-s-2vcpu-2gb"
  machine_storage_size = 40

  maintenance_window = {
    day  = "Tue"
    hour = 15
  }

  kafka_topics = {
    myuser_topic = {
      partition_count                     = 3
      replication_factor                  = 2
      cleanup_policy                      = "compact"
      compression_type                    = "uncompressed"
      delete_retention_ms                 = 14000
      file_delete_delay_ms                = 170000
      flush_messages                      = 92233
      flush_ms                            = 92233720368
      index_interval_bytes                = 40962
      max_compaction_lag_ms               = 9223372036854775807
      max_message_bytes                   = 1048588
      message_down_conversion_enable      = true
      message_format_version              = "3.0-IV1"
      message_timestamp_difference_max_ms = 9223372036854775807
      message_timestamp_type              = "log_append_time"
      min_cleanable_dirty_ratio           = 0.5
      min_compaction_lag_ms               = 20000
      min_insync_replicas                 = 2
      preallocate                         = false
      retention_bytes                     = -1
      retention_ms                        = -1
      segment_bytes                       = 209715200
      segment_index_bytes                 = 10485760
      segment_jitter_ms                   = 0
      segment_ms                          = 604800000
    }
  }

  users = {
    myuser = {
      acls = [
        {
          topic      = "myuser_topic"
          permission = "admin"
        },
      ]
    }
  }

  trusted_sources = [
    {
      type  = "ip_addr"
      value = "10.114.32.24"
    },
    {
      type  = "k8s"
      value = "123ab45-67c8-de9f0-1ghj23"
    },
  ]

  tags = [
    "dev",
  ]
}
```
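
The inputs table documents topics under a `topics` variable (a list of objects with `name`, `partition_count`, `replication_factor`, and a nested `config`), whereas the full example uses a `kafka_topics` map. Which form a given module version accepts is not confirmed here. As a sketch that assumes the documented `topics` schema, the same topic might be declared like this, with only a few `config` keys shown:

```hcl
module "kafka" {
  source  = "solutions.corewide.com/digitalocean/tf-do-kafka/digitalocean"
  version = "~> 1.1"

  region      = "fra1"
  name_prefix = "develop"

  # Assumed shape: list(object), as described in the inputs table
  topics = [
    {
      name               = "myuser_topic"
      partition_count    = 3
      replication_factor = 2

      config = {
        cleanup_policy      = "compact"
        min_insync_replicas = 2
        retention_ms        = -1
      }
    },
  ]
}
```

Running `terraform validate` against the installed module version is a quick way to see which variable name it actually expects.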

| Variable | Description | Type | Default | Required | Sensitive |
|---|---|---|---|---|---|
| `name_prefix` | Name prefix for the created resources | `string` | | yes | no |
| `region` | DigitalOcean region to create resources in | `string` | | yes | no |
| `users[<key>]` | Name of the user to create | `string` | | yes | no |
| `engine_version` | Kafka engine version | `string` | `3.7` | no | no |
| `machine_size` | Database Droplet size associated with Kafka cluster | `string` | `db-s-2vcpu-2gb` | no | no |
| `machine_storage_size` | Kafka node storage size (GB) | `number` | `40` | no | no |
| `maintenance_window` | Maintenance window configuration | `object` | `{}` | no | no |
| `maintenance_window.day` | The day of the week (Mon, Tue, etc.) the maintenance window occurs | `string` | `Mon` | no | no |
| `maintenance_window.hour` | The hour when the maintenance updates are applied, in UTC 24-hour format. Examples: 2, 3, 23 | `number` | `2` | no | no |
| `node_count` | Number of Kafka cluster nodes | `number` | `3` | no | no |
| `tags` | List of tags to be assigned to the cluster resource | `list(string)` | `[]` | no | no |
| `topics` | List of Kafka topics to create inside the cluster. Can be set for kafka engine | `list(object)` | `[]` | no | no |
| `topics.config` | Map containing Kafka topic configuration parameters | `object` | | yes | no |
| `topics.config.cleanup_policy` | The topic cleanup policy that describes whether messages should be deleted, compacted, or both when retention policies are violated. Supported values are `delete`, `compact`, or `compact_delete` | `string` | `compact` | no | no |
| `topics.config.compression_type` | The compression codec used for a given topic. This may be one of `uncompressed`, `gzip`, `snappy`, `lz4`, `producer`, `zstd` | `string` | `uncompressed` | no | no |
| `topics.config.delete_retention_ms` | The amount of time, in ms, that deleted records are retained | `number` | `14000` | no | no |
| `topics.config.file_delete_delay_ms` | The amount of time, in ms, to wait before deleting a topic log segment from the filesystem | `number` | `170000` | no | no |
| `topics.config.flush_messages` | The number of messages accumulated on a topic partition before they are flushed to disk | `number` | `92233` | no | no |
| `topics.config.flush_ms` | The maximum time, in ms, that a topic is kept in memory before being flushed to disk | `number` | `92233720368` | no | no |
| `topics.config.index_interval_bytes` | The interval, in bytes, in which entries are added to the offset index | `number` | `40962` | no | no |
| `topics.config.max_compaction_lag_ms` | The maximum time, in ms, that a particular message will remain uncompacted | `number` | `9223372036854775807` | no | no |
| `topics.config.max_message_bytes` | The maximum size, in bytes, of a message | `number` | `1048588` | no | no |
| `topics.config.message_down_conversion_enable` | Determines whether down-conversion of message formats for consumers is enabled | `bool` | `true` | no | no |
| `topics.config.message_format_version` | The version of the inter-broker protocol that will be used | `string` | `3.0-iv1` | no | no |
| `topics.config.message_timestamp_difference_max_ms` | The maximum difference, in ms, between the timestamp specified in a message and when the broker receives the message | `string` | `9223372036854775807` | no | no |
| `topics.config.message_timestamp_type` | Specifies which timestamp to use for the message. This may be one of `create_time` or `log_append_time` | `string` | `log_append_time` | no | no |
| `topics.config.min_cleanable_dirty_ratio` | A scale between 0.0 and 1.0 which controls the frequency of the compactor | `number` | `0.5` | no | no |
| `topics.config.min_compaction_lag_ms` | The minimum time, in ms, that a particular message will remain uncompacted | `number` | `20000` | no | no |
| `topics.config.min_insync_replicas` | The number of replicas that must acknowledge a write before it is considered successful. -1 is a special setting to indicate that all nodes must acknowledge a message before the write is considered successful | `number` | `1` | no | no |
| `topics.config.preallocate` | Determines whether to preallocate a file on disk when creating a new log segment within a topic | `bool` | `false` | no | no |
| `topics.config.retention_bytes` | The maximum size, in bytes, of a topic before messages are deleted. -1 is a special setting indicating that this setting has no limit | `number` | `-1` | no | no |
| `topics.config.retention_ms` | The maximum time, in ms, that a topic log file is retained before deleting it. -1 is a special setting indicating that this setting has no limit | `number` | `-1` | no | no |
| `topics.config.segment_bytes` | The maximum size, in bytes, of a single topic log file | `number` | `209715200` | no | no |
| `topics.config.segment_index_bytes` | The maximum size, in bytes, of the offset index | `number` | `10485760` | no | no |
| `topics.config.segment_jitter_ms` | The maximum time, in ms, subtracted from the scheduled segment disk flush time to avoid the thundering herd problem for segment flushing | `number` | `0` | no | no |
| `topics.config.segment_ms` | The maximum time, in ms, before the topic log will flush to disk | `number` | `604800000` | no | no |
| `topics[*].name` | Name of Kafka topic | `string` | | yes | no |
| `topics[*].partition_count` | The number of partitions for the topic | `number` | `3` | no | no |
| `topics[*].replication_factor` | The number of nodes that topics are replicated across | `number` | `2` | no | no |
| `trusted_sources` | List of trusted sources to restrict connections to the database cluster | `list(object)` | `[]` | no | no |
| `trusted_sources[*].type` | Type of resource that the firewall rule allows to access the database cluster. Possible values are: `droplet`, `k8s`, `ip_addr`, `tag`, or `app` | `string` | | yes | no |
| `trusted_sources[*].value` | ID of the specific resource, name of a tag applied to a group of resources, or IP address that the firewall rule allows to access the database cluster | `string` | | yes | no |
| `users` | Map of users, where the key is a user name and the value is a parameters object | `map(object)` | `{}` | no | no |
| `users[<key>].acls` | A list of ACLs (Access Control Lists) specifying permission on topics within the Kafka cluster. Applicable and required if engine is set to kafka | `list(object)` | | no | no |
| `users[<key>].acls[*].permission` | The permission level applied to the ACL. This parameter supports the following values: `admin`, `consume`, `produce`, and `produceconsume` | `string` | `admin` | no | no |
| `users[<key>].acls[*].topic` | A regex for matching the topic(s) that this ACL should apply to | `string` | | yes | no |
| `vpc_id` | The ID of the VPC where the Kafka cluster will be located. If not provided, default DigitalOcean VPC is used | `string` | | no | no |
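
To illustrate the `users[<key>].acls` inputs, the sketch below gives two users different permission levels. The user names and topic patterns are hypothetical; `topic` is treated as a regex, as the table describes.

```hcl
module "kafka" {
  source  = "solutions.corewide.com/digitalocean/tf-do-kafka/digitalocean"
  version = "~> 1.1"

  region      = "fra1"
  name_prefix = "develop"

  users = {
    # Hypothetical user that may only write to topics starting with "orders"
    orders_writer = {
      acls = [
        {
          topic      = "orders.*"
          permission = "produce"
        },
      ]
    }

    # Hypothetical user that may read and write one specific topic
    billing_app = {
      acls = [
        {
          topic      = "billing_events"
          permission = "produceconsume"
        },
      ]
    }
  }
}
```

Leaving `permission` out of an ACL entry would fall back to the documented default, `admin`, so it is worth setting explicitly for least-privilege users.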

| Output | Description | Type | Sensitive |
|---|---|---|---|
| `database_cluster` | Cluster resource attributes | `resource` | yes |
| `topics` | Kafka Topic resources' attributes | `computed` | no |
| `users` | User resources' attributes | `computed` | yes |
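
As a sketch of consuming these outputs, the configuration below re-exports the cluster endpoint from a root module. It assumes `database_cluster` exposes the attributes of the provider's `digitalocean_database_cluster` resource (such as `host` and `port`), which this page does not spell out. Because the module output is marked sensitive, Terraform requires any root output derived from it to be marked sensitive as well.

```hcl
module "kafka" {
  source  = "solutions.corewide.com/digitalocean/tf-do-kafka/digitalocean"
  version = "~> 1.1"

  region      = "fra1"
  name_prefix = "develop"
}

# Assumes the output carries the cluster resource's `host` attribute
output "kafka_host" {
  value     = module.kafka.database_cluster.host
  sensitive = true
}

# Assumes the output carries the cluster resource's `port` attribute
output "kafka_port" {
  value     = module.kafka.database_cluster.port
  sensitive = true
}
```

The values can then be read with `terraform output -raw kafka_host`.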

| Dependency | Version | Kind |
|---|---|---|
| `terraform` | `>= 1.3` | CLI |
| `digitalocean/digitalocean` | `~> 2.34` | provider |