
The module creates a managed Kubernetes cluster (GKE) in GCP.

Supported Kubernetes versions are 1.32 and newer.

By default, a multi-zonal cluster is configured, but it's also possible to place the cluster in a single zone of a region. See the location (`region`) parameter reference.
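Here is a sketch of the single-zone option. It assumes that the `region` input from the inputs table is the location parameter referred to above, since its description says it accepts either a region or a specific zone within it. `europe-west1-b`, `google_compute_network.main` and `google_kms_crypto_key.gke` are placeholders:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 5.1"

  name_prefix = "foo"
  vpc         = google_compute_network.main.self_link

  # Assumption: a zone such as "europe-west1-b" gives a single-zone cluster,
  # while a region such as "europe-west1" keeps the multi-zonal layout
  region = "europe-west1-b"

  secrets_encryption = {
    key_id = google_kms_crypto_key.gke.id
  }
}
```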

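The default module configuration ensures the GKE cluster is created with enhanced security features enabled:

* shielded nodes (`shielded_nodes_enabled`)
* secure boot for cluster nodes (`secure_boot_enabled`)
* blocking of project-level SSH keys on cluster nodes (`block_project_ssh_keys`)
* Kubernetes secrets encryption with a Cloud KMS key (`secrets_encryption.enabled`)
* network policies support (`network_policies_enabled`)
* private cluster nodes (`cluster_private_nodes_enabled`)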

Once you have a Corewide Solutions Portal account, this one-time action will use your browser session to retrieve credentials:

```shell
terraform login solutions.corewide.com
```

Initialize mandatory providers:

Copy and paste into your Terraform configuration and insert the variables:

```hcl
module "tf_gcp_k8s_gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 5.1.1"

  # specify module inputs here or try one of the examples below
  ...
}
```

Initialize the setup:

```shell
terraform init
```

The Corewide DevOps team strictly follows the Semantic Versioning Specification to provide our clients with products that have predictable upgrades between versions. We recommend pinning patch versions of our modules using the pessimistic constraint operator (`~>`) to prevent breaking changes during upgrades.

To get new features during upgrades (without breaking compatibility), use `~> 5.1` and run `terraform init -upgrade`.

For the safest setup, use strict pinning with `version = "5.1.1"`.
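For illustration, this is how the three pinning strategies described above look in a module block. Module inputs are omitted, and exactly one `version` line should stay active:

```hcl
module "gke" {
  source = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"

  # Keep exactly one of the following constraints:
  version = "~> 5.1.1" # patch updates only: >= 5.1.1, < 5.2.0 (recommended)
  # version = "~> 5.1" # minor and patch updates: >= 5.1.0, < 6.0.0
  # version = "5.1.1"  # strict pinning: exactly 5.1.1

  # ... module inputs ...
}
```

After changing the constraint to a wider range, `terraform init -upgrade` is what actually selects the newest matching release.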


All notable changes to this project are documented here.

The format is based on Keep a Changelog, and this project adheres to Semantic Versioning.

* `node_pools[*].name` input
* `auto_upgrade` variable to switch node auto-upgrade feature, default value `true`
* `maintenance_window` variable to configure cluster maintenance policy
* `maintenance_exclusion` variable to configure exceptions to the cluster maintenance window policy
* `dns_endpoint_enabled` variable to toggle control plane DNS endpoint (disabled by default)
* `public_endpoint_enabled` variable to toggle control plane public endpoint (enabled by default)
* `block_project_ssh_keys` variable default value to `true`
* `secure_boot_enabled` variable default value to `true`
* `secrets_encryption.enabled` variable default value to `true`
* `network_policies_enabled` variable default value to `true`
* `google` and `google-beta` providers version constraints from `~> 6.2` to `~> 6.27`
* `cluster_ipv4_cidr_block` variable to configure the IP address range for the cluster pod IPs
* `vpc_native` variable not switching networking mode
* `network_policies_enabled` variable to toggle the network policies support in the cluster (disabled by default)
* `block_project_ssh_keys` variable to toggle project-level SSH keys blocking for cluster nodes (disabled by default)
* `shielded_nodes_enabled` variable to toggle shielded nodes for cluster (enabled by default)
* `secure_boot_enabled` variable to toggle secure boot for cluster nodes (disabled by default)
* `binary_auth_enabled` variable to toggle binary authorization for cluster (disabled by default)
* `secrets_encryption` variable to toggle Kubernetes secrets encryption with KMS key (disabled by default)
* 1.32
* `gateway_api_config_channel` variable, which defines the configuration options for API Gateway channel

BREAKING CHANGE: all the node pools will have the unique suffix appended to their name. To incorporate the suffixes for the node pool names, node pool redeployment is needed. Please see the Upgrade Notes section.

* `default_node_pool` variable which defines the configurational options for the maintenance node pool that is created unconditionally
* `<cluster_name>/node_pool_name` label to all node pools
* `create_before_destroy` lifecycle option to node pools
* `name` by adding a random suffix
* `vpc_native` and `cluster_private_nodes_enabled` property values to `true`
* `enabled`
* `observability` variable which allows the user to configure Google-managed cluster monitoring and deploy Google Cloud Managed Service for Prometheus (GMP) as GKE add-on
* `deletion_protection_enabled` variable which allows the user to destroy the cluster, by default, is `true`. Set to `false` to destroy the cluster
* `nullable` parameter to all variables
* `cluster_version` to 1.29
* `google` and `google-beta` providers' version to 6.2
* `auto_upgrade` option to `true` if `STABLE` release channel is selected (required by provider)

(Last version compatible with Terraform Google v4)

* `min_master_version` parameter to `google_container_cluster` resource
* `cluster_version` to 1.27
* `node_pools.image` to `COS_CONTAINERD`
* `cluster_version` to 1.23
* `vpc_native` variable, to fix absence of IP aliasing
* `cluster_master_cidr` variable, to fix unrestricted inter-cluster communication
* `allowed_mgmt_networks`, `cluster_private_nodes_enabled` variables, to fix unrestricted access to cluster

BREAKING CHANGE: now all node pools have corresponding names in the state instead of abstract indexes, which isn't compatible with older versions. Upgrade from an older version is possible with manual changes, see the Upgrade Notes section.

* `for_each` meta-argument instead of `count`. With this change, it is now possible to store and reference Node Pool resources using their corresponding names instead of abstract indexes
* `tags` parameter to identify valid sources or targets for network firewalls
* 1.20+

v1.x to v2.x

Starting from v2.0, the module manages node pool copies with the `for_each` meta-argument instead of `count`, which isn't compatible with older versions. After upgrading the module version, re-initialize the module and rename the managed indexed resources in the Terraform state:

For example, if the `node_pools` variable contains two node pools, where:

* `node_pools[0].name = "maintenance"`
* `node_pools[1].name = "app"`

then move their state as follows:

```bash
terraform state mv 'module.gke.google_container_node_pool.gke[0]' 'module.gke.google_container_node_pool.gke["maintenance"]'
terraform state mv 'module.gke.google_container_node_pool.gke[1]' 'module.gke.google_container_node_pool.gke["app"]'
```

v2.x to v3.x

Starting from v3.0, the module requires a newer version of the Google providers, which isn't compatible with older module versions. After upgrading the module version, re-initialize the module to upgrade the Google providers.

Upgrade the Google providers version at the project level to `~> 6.2`:

```hcl
# required_providers must be declared inside the top-level terraform block
terraform {
  required_providers {
    google = {
      source  = "hashicorp/google"
      version = "~> 6.2"
    }

    google-beta = {
      source  = "hashicorp/google-beta"
      version = "~> 6.2"
    }
  }
}
```

Upgrade project dependencies:

```bash
terraform init -upgrade
```

v3.x to v4.x

Starting from v4.0, the module introduces the maintenance node pool, which is created unconditionally. If the maintenance node pool was configured with the previous module version, move its supported parameters from the `node_pools` parameter to the `default_node_pool` parameter.
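Here is a minimal before/after sketch of that move. It assumes a v3.x configuration where the maintenance pool was declared in `node_pools` under the hypothetical name `maintenance`. Only the attributes listed for `default_node_pool` in the inputs table (`node_size`, `min_size`, `max_size`, `disk_size`) can be carried over, and all values are illustrative:

```hcl
# Before (v3.x), the maintenance pool was an ordinary node_pools entry:
#
#   node_pools = [
#     { name = "maintenance", node_size = "e2-standard-2", min_size = 1, max_size = 2 },
#     { name = "application", min_size = 2, max_size = 5 },
#   ]

# After (v4.x), its settings move to default_node_pool
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 4.0"

  name_prefix = "foo"
  vpc         = google_compute_network.main.self_link

  default_node_pool = {
    node_size = "e2-standard-2"
    min_size  = 1
    max_size  = 2
  }

  # The "maintenance" entry is removed from node_pools
  node_pools = [
    {
      name     = "application"
      min_size = 2
      max_size = 5
    },
  ]
}
```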

Starting from v4.0, the module enables the `cluster_private_nodes_enabled` parameter by default. To avoid cluster re-creation, set `cluster_private_nodes_enabled` to `false`. Otherwise, if `cluster_private_nodes_enabled` is set to `true`, the cluster must be re-created using `terraform destroy --target module.<module-name>` followed by `terraform apply --target module.<module-name>`.
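Here is a sketch of the first option. It assumes an existing cluster created with a pre-v4.0 module version. Setting the flag explicitly keeps the previous node networking and avoids re-creating the cluster. The other values are placeholders:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 4.0"

  name_prefix = "foo"
  vpc         = google_compute_network.main.self_link

  # Keep the pre-v4.0 behaviour so the existing cluster is not re-created
  cluster_private_nodes_enabled = false
}
```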

Starting from v4.0, the module appends a random suffix to the node pool `name` property. For the change to take effect, the node pools must be redeployed.

In the following command, replace `<module-name>` with the name of the `module` block that uses this module, then run it to replace the node pools:

```bash
terraform apply $(terraform state list | grep module.<module-name>.google_container_node_pool | xargs -I{} echo "-replace={} " | sed -E 's/\[/["/g; s/\]/"]/g')
```

If the `cloud.google.com/gke-nodepool` label was used in the application configuration, it has to be replaced with `<name_prefix>/node-pool-name`.

For example, suppose an application pod used the following configuration on a cluster with `name_prefix = "production"`:

```yaml
nodeSelector:
  cloud.google.com/gke-nodepool: application
```

Replace it with the following configuration to ensure that the pods are scheduled both before and after the module version migration:

```yaml
nodeSelector:
  production/node-pool-name: application
```

v4.1.x to v4.2.x

Starting from v4.2, the module raises the minimum supported Kubernetes version to 1.32. You can skip this chapter if you already use Kubernetes 1.32 or higher.

The cluster version upgrade itself should complete without downtime. However, the outcome also depends on the apps and services hosted on the cluster. To make sure the upgrade goes smoothly, review the following documentation:

* AWS K8s cluster upgrade

* K8s Deprecated API Migration Guide

* K8s deprecation policy

The upgrade should roll one version at a time: from v1.29 to v1.30, then from v1.30 to v1.31, and then from v1.31 to v1.32, and so on.
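Here is a sketch of one such step. It assumes the version is bumped through the module's `cluster_version` input while still on a v4.1.x module release, because v4.2 itself requires 1.32. Each value is applied and verified before moving on to the next one:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 4.1.0" # >= 4.1.0, < 4.2.0: stay on v4.1.x until the cluster runs 1.32

  name_prefix = "foo"
  vpc         = google_compute_network.main.self_link

  # One minor version per `terraform apply`:
  # "1.29" -> "1.30" -> "1.31" -> "1.32"
  cluster_version = "1.30"
}
```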

v4.x to v5.x

Starting from v5.0, the module enables security features by default, which requires node pool recreation if these features were previously disabled. If you already have secure boot, secrets encryption, and project SSH key blocking enabled, you can skip this chapter.

The cluster upgrade itself should complete without downtime. Node pools are recreated according to the surge upgrade strategy.
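If the node pool recreation has to be postponed, one option is to explicitly set the affected inputs back to their pre-v5.0 defaults, as listed in the changelog. This sketch is not an upgrade path the module documents, and it keeps the weaker security settings in place. It also assumes `secrets_encryption.key_id` may be omitted when encryption is disabled:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 5.1"

  name_prefix = "foo"
  vpc         = google_compute_network.main.self_link

  # Pre-v5.0 defaults, set explicitly to defer node pool recreation
  secure_boot_enabled      = false
  block_project_ssh_keys   = false
  network_policies_enabled = false

  secrets_encryption = {
    enabled = false
  }
}
```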

Starting from v5.0, the module raises the minimum supported `google` and `google-beta` provider versions to 6.27. You can skip this step if you already use `google` and `google-beta` provider versions 6.27 or higher.
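For reference, here is a project-level constraint that satisfies this minimum. It mirrors the v2.x to v3.x example and the `~> 6.27` constraint from the dependencies table:

```hcl
terraform {
  required_providers {
    # ~> 6.27 allows >= 6.27.0 and < 7.0.0
    google = {
      source  = "hashicorp/google"
      version = "~> 6.27"
    }

    google-beta = {
      source  = "hashicorp/google-beta"
      version = "~> 6.27"
    }
  }
}
```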

Upgrade project dependencies:

```bash
terraform init -upgrade
```

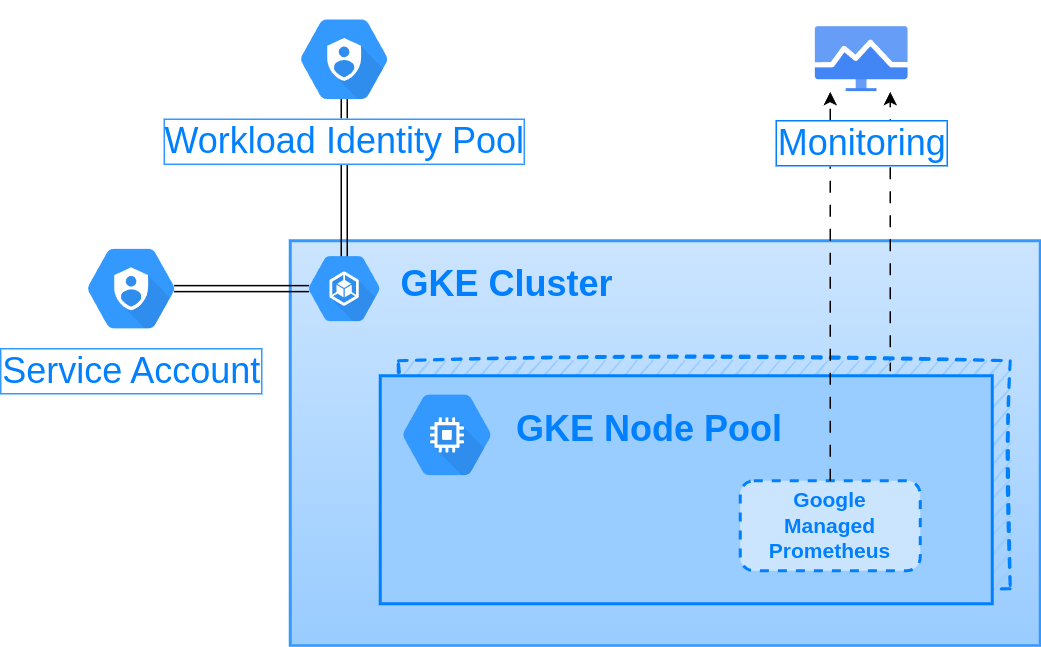

Creates a GKE cluster with workload identity enabled and a workload identity pool created, the maintenance node pool with default settings, and an additional preemptible node pool with autoscaling:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 5.1"

  name_prefix     = "foo"
  vpc             = google_compute_network.main.self_link
  release_channel = "STABLE"

  workload_identity_enabled     = true
  create_workload_identity_pool = true

  secrets_encryption = {
    key_id = google_kms_crypto_key.gke.id
  }

  node_pools = [
    {
      name        = "application"
      min_size    = 2
      max_size    = 5
      preemptible = true
      image       = "cos_containerd"
      tags        = ["application"]
    },
  ]
}
```

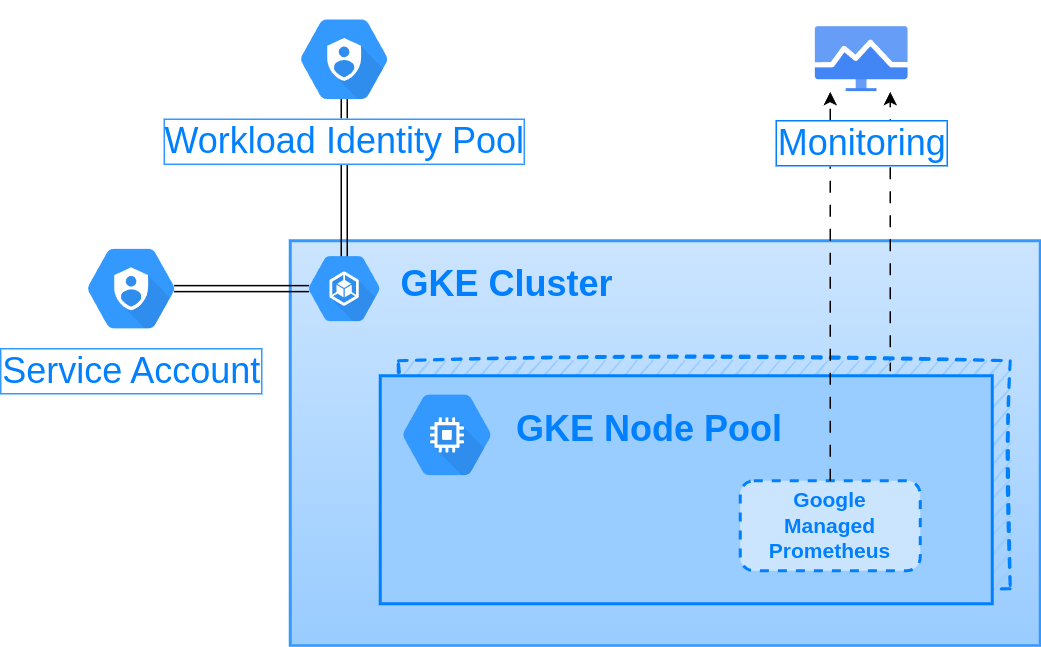

Creates a GKE cluster with IP aliasing enabled, restricted connection to the cluster API, disabled deletion protection, observability configured, and a custom cluster pod IP range:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 5.1"

  name_prefix                 = "foo"
  vpc                         = google_compute_network.main.self_link
  release_channel             = "STABLE"
  gateway_api_config_channel  = "CHANNEL_STANDARD"
  deletion_protection_enabled = false
  vpc_native                  = true
  cluster_ipv4_cidr_block     = "10.100.0.0/20"

  allowed_mgmt_networks = {
    office = "104.22.0.0/24"
  }

  secrets_encryption = {
    key_id = google_kms_crypto_key.gke.id
  }

  default_node_pool = {
    node_size = "e2-standard-4"
  }

  node_pools = [
    {
      name        = "application"
      min_size    = 2
      max_size    = 5
      preemptible = true
      tags = [
        "application",
      ]
    },
  ]

  observability = {
    enabled                    = true
    managed_prometheus_enabled = true
    components = [
      "SYSTEM_COMPONENTS",
      "APISERVER",
      "SCHEDULER",
      "CONTROLLER_MANAGER",
      "STORAGE",
      "HPA",
      "DEPLOYMENT",
    ]
  }
}
```

Creates a GKE cluster with a custom maintenance window from 11:00 PM to 5:00 AM UTC, weekly on Fridays and Saturdays, with maintenance exclusions configured:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 5.1"

  name_prefix     = "foo"
  vpc             = google_compute_network.main.self_link
  release_channel = "STABLE"

  workload_identity_enabled     = true
  create_workload_identity_pool = true
  auto_upgrade                  = true

  maintenance_window = {
    start_time = "2006-01-02T23:00:00Z"
    end_time   = "2006-01-03T05:00:00Z"
    days = [
      "Fr",
      "Sa",
    ]
  }

  maintenance_exclusion = [
    {
      name       = "foo"
      start_time = "2025-12-20T20:00:00Z"
      end_time   = "2025-12-25T05:00:00Z"
      scope      = "NO_UPGRADES"
    },
    {
      name       = "bar"
      start_time = "2025-12-31T12:00:00Z"
      end_time   = "2026-01-01T05:00:00Z"
      scope      = "NO_MINOR_UPGRADES"
    },
  ]

  secrets_encryption = {
    key_id = google_kms_crypto_key.gke.id
  }

  node_pools = [
    {
      name        = "application"
      image       = "cos_containerd"
      min_size    = 2
      max_size    = 5
      preemptible = true
      tags        = ["application"]
    },
  ]
}
```
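Here is a variation on the maintenance window above. It assumes that `weekly_recurrence = false` makes the window repeat every day, as the inputs table describes, in which case `days` presumably has no effect. A daily 4-hour window adds up to roughly 120 hours a month, comfortably above the 48-hour monthly minimum noted in the inputs table. Values are illustrative:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 5.1"

  name_prefix     = "foo"
  vpc             = google_compute_network.main.self_link
  release_channel = "STABLE"

  # Daily window from 01:00 to 05:00 UTC; the date only anchors the time of day
  maintenance_window = {
    start_time        = "2006-01-02T01:00:00Z"
    end_time          = "2006-01-02T05:00:00Z"
    weekly_recurrence = false
  }

  secrets_encryption = {
    key_id = google_kms_crypto_key.gke.id
  }
}
```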

Creates a GKE cluster with Network Policies and binary authorization enabled in addition to advanced security features:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 5.1"

  name_prefix         = "foo"
  vpc                 = google_compute_network.main.self_link
  release_channel     = "STABLE"
  binary_auth_enabled = true

  secrets_encryption = {
    key_id = google_kms_crypto_key.main.id
  }
}
```

GKE with a single node pool: the maintenance node pool is created unconditionally. Its parameters, such as the node size, can be customized using the `default_node_pool` variable:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 5.1"

  name_prefix     = "foo"
  vpc             = google_compute_network.main.self_link
  release_channel = "STABLE"

  secrets_encryption = {
    key_id = google_kms_crypto_key.gke.id
  }
}
```
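The same single-pool cluster can be sketched with a customized maintenance pool. The attribute names come from the `default_node_pool` rows of the inputs table, whose defaults are `e2-standard-2`, a disk size of 20 and `min_size` 1. The values below are illustrative:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 5.1"

  name_prefix     = "foo"
  vpc             = google_compute_network.main.self_link
  release_channel = "STABLE"

  # Override the maintenance node pool defaults
  default_node_pool = {
    node_size = "e2-standard-4"
    disk_size = 50
    min_size  = 1
    max_size  = 3
  }

  secrets_encryption = {
    key_id = google_kms_crypto_key.gke.id
  }
}
```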

| Variable | Description | Type | Default | Required | Sensitive |
|---|---|---|---|---|---|
| cluster_ipv4_cidr_block | The IP address range for the cluster pod IPs. Set to blank to have a range chosen with the default size (random /14 network) | string | | yes | no |
| name_prefix | Name prefix for Google service account and GKE cluster | string | | yes | no |
| vpc | VPC network self_link which will be attached to the Kubernetes cluster | string | | yes | no |
| allowed_mgmt_networks | Map of CIDR blocks allowed to connect to cluster API | map(string) | | no | no |
| auto_upgrade | Enables GKE cluster auto-upgrade. Can be disabled only if release_channel is UNSPECIFIED | bool | true | no | no |
| binary_auth_enabled | Whether to enable binary authorization for GKE cluster | bool | false | no | no |
| block_project_ssh_keys | Whether to prevent nodes from accepting SSH keys stored in project metadata | bool | true | no | no |
| cluster_master_cidr | CIDR block to be used for control plane components | string | 172.16.0.0/28 | no | no |
| cluster_private_nodes_enabled | Indicates whether cluster private nodes should be enabled. Must be set to true to have an option to disable control plane public endpoint | bool | true | no | no |
| cluster_version | Kubernetes version (Major.Minor) | string | 1.32 | no | no |
| create_workload_identity_pool | Indicates whether to create a GKE workload identity pool or use the existing one (one pool per project) | bool | true | no | no |
| default_node_pool | Configuration of the maintenance node pool that is created unconditionally | object | {} | no | no |
| default_node_pool.disk_size | Disk size of a node | number | 20 | no | no |
| default_node_pool.max_size | Maximum number of nodes in the pool | number | | no | no |
| default_node_pool.min_size | Minimum number of nodes in the pool | number | 1 | no | no |
| default_node_pool.node_size | Instance type to use for node creation | string | e2-standard-2 | no | no |
| deletion_protection_enabled | Prevent cluster deletion by Terraform | bool | true | no | no |
| dns_endpoint_enabled | Indicates whether control plane DNS endpoint is enabled. Can be used to access a private cluster control plane if the public endpoint is disabled | bool | false | no | no |
| gateway_api_config_channel | Configuration options for the Gateway API config feature | string | CHANNEL_DISABLED | no | no |
| maintenance_exclusion | Exceptions to the maintenance window. Non-emergency maintenance should not occur in these windows | list(object) | | no | no |
| maintenance_exclusion[*].end_time | Maintenance exclusion interval window end date/time in UTC (RFC3339 Zulu) datetime format, e.g. 2025-09-02T02:00:00Z | string | 2026-01-02T10:00:00Z | no | no |
| maintenance_exclusion[*].name | Defines the name of the maintenance exclusion interval | string | Maintenance Exclusion | no | no |
| maintenance_exclusion[*].scope | The scope of automatic upgrades to restrict in the exclusion window. Possible values are: NO_UPGRADES, NO_MINOR_UPGRADES, NO_MINOR_OR_NODE_UPGRADES | string | NO_MINOR_UPGRADES | no | no |
| maintenance_exclusion[*].start_time | Maintenance exclusion interval window start date/time in UTC (RFC3339 Zulu YYYY-MM-DDThh:mm:ssZ) datetime format, e.g. 2025-09-01T22:00:00Z | string | 2025-12-31T12:00:00Z | no | no |
| maintenance_window | GKE maintenance window parameters. In total, the maintenance window must cover at least 48 hours a month | object | | no | no |
| maintenance_window.days | List of weekdays in 2-letter format, on which the maintenance window will be applied. Possible values are: Mo, Tu, We, Th, Fr, Sa, Su | list(string) | ['Fr', 'Sa'] | no | no |
| maintenance_window.end_time | Maintenance window end date/time in UTC (RFC3339 Zulu) datetime format, e.g. 2025-09-02T02:00:00Z. Duration = end_time - start_time. If the window crosses midnight, set the date of end_time to the next day (or further) to show the actual duration | string | 2006-01-02T06:00:00Z | no | no |
| maintenance_window.start_time | Maintenance window start date/time in UTC (RFC3339 Zulu YYYY-MM-DDThh:mm:ssZ) datetime format, e.g. 2025-09-01T22:00:00Z. The date acts only as an anchor; the time of day defines when the window starts on each recurrence | string | 2006-01-02T00:00:00Z | no | no |
| maintenance_window.weekly_recurrence | Defines whether the maintenance window repeats weekly (set true) or daily (set false) | bool | true | no | no |
| network_policies_enabled | Whether network policies support is enabled in the cluster | bool | true | no | no |
| node_pools | List of node pools to create | list(object) | [] | no | no |
| node_pools[*].disk_size | Disk size of a node | number | 20 | no | no |
| node_pools[*].image | Image type of node pools | string | COS_CONTAINERD | no | no |
| node_pools[*].max_size | Maximum number of nodes in the pool | number | | no | no |
| node_pools[*].min_size | Minimum number of nodes in the pool | number | 1 | no | no |
| node_pools[*].name | Name of the node pool | string | | yes | no |
| node_pools[*].node_size | Instance type to use for node creation | string | e2-standard-2 | no | no |
| node_pools[*].preemptible | Whether the nodes should be preemptible | bool | false | no | no |
| node_pools[*].tags | The list of instance tags to identify valid sources or targets for network firewalls (when not set, the default rule set is applied) | list(string) | [] | no | no |
| observability | Cluster observability configuration | object | {} | no | no |
| observability.components | List of Kubernetes components exposing metrics to monitor | list(string) | ['SYSTEM_COMPONENTS', 'APISERVER', 'SCHEDULER', 'CONTROLLER_MANAGER', 'STORAGE', 'HPA', 'POD', 'DAEMONSET', 'DEPLOYMENT', 'STATEFULSET', 'KUBELET', 'CADVISOR', 'DCGM'] | no | no |
| observability.enabled | Indicates whether cluster observability is enabled | bool | false | no | no |
| observability.managed_prometheus_enabled | Indicates whether Google Cloud Managed Service for Prometheus (GMP) should be deployed | bool | true | no | no |
| public_endpoint_enabled | Indicates whether control plane public endpoint is enabled. Can be disabled only if var.cluster_private_nodes_enabled is set to true | bool | true | no | no |
| region | Specific zone which exists within the region or a single region | string | | no | no |
| release_channel | Configuration options for the Release channel feature | string | UNSPECIFIED | no | no |
| secrets_encryption | Secrets encryption at the application level configuration | object | {} | no | no |
| secrets_encryption.enabled | Indicates whether secrets encryption is enabled | bool | true | no | no |
| secrets_encryption.key_id | Cloud KMS key ID to use for the secrets encryption in etcd | string | | no | no |
| secure_boot_enabled | Whether to enable secure boot feature for GKE nodes | bool | true | no | no |
| shielded_nodes_enabled | Whether to enable shielded GKE nodes feature | bool | true | no | no |
| subnet_id | The name or self_link of the Google Compute Engine subnetwork in which the cluster's instances are launched | string | | no | no |
| vpc_native | Indicates whether IP aliasing should be enabled | bool | true | no | no |
| workload_identity_enabled | Indicates whether workload identity is enabled and whether nodes should store their metadata on the GKE metadata server | bool | false | no | no |
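As an illustration of how the endpoint-related inputs fit together, here is a sketch of a cluster whose control plane is reachable only through its DNS endpoint. It follows the constraints stated in the descriptions above: `public_endpoint_enabled` can be disabled only when `cluster_private_nodes_enabled` is `true`, and `dns_endpoint_enabled` gives access to a private control plane. How clients authenticate against the DNS endpoint is outside the scope of this sketch:

```hcl
module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 5.1"

  name_prefix     = "foo"
  vpc             = google_compute_network.main.self_link
  release_channel = "STABLE"

  # Private nodes are required before the public endpoint can be turned off
  cluster_private_nodes_enabled = true
  public_endpoint_enabled       = false

  # Reach the private control plane through its DNS endpoint instead
  dns_endpoint_enabled = true

  secrets_encryption = {
    key_id = google_kms_crypto_key.gke.id
  }
}
```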

| Output | Description | Type | Sensitive |
|---|---|---|---|
| cluster | GKE cluster resource | resource | no |
| node_pools | List of created node pools | resource | no |
| workload_identity_pool | GKE workload identity pool data | computed | no |

| Dependency | Version | Kind |
|---|---|---|
| terraform | >= 1.3 | CLI |
| hashicorp/google | ~> 6.27 | provider |
| hashicorp/google-beta | ~> 6.27 | provider |

PodMonitoring CRD documentation