We value your privacy

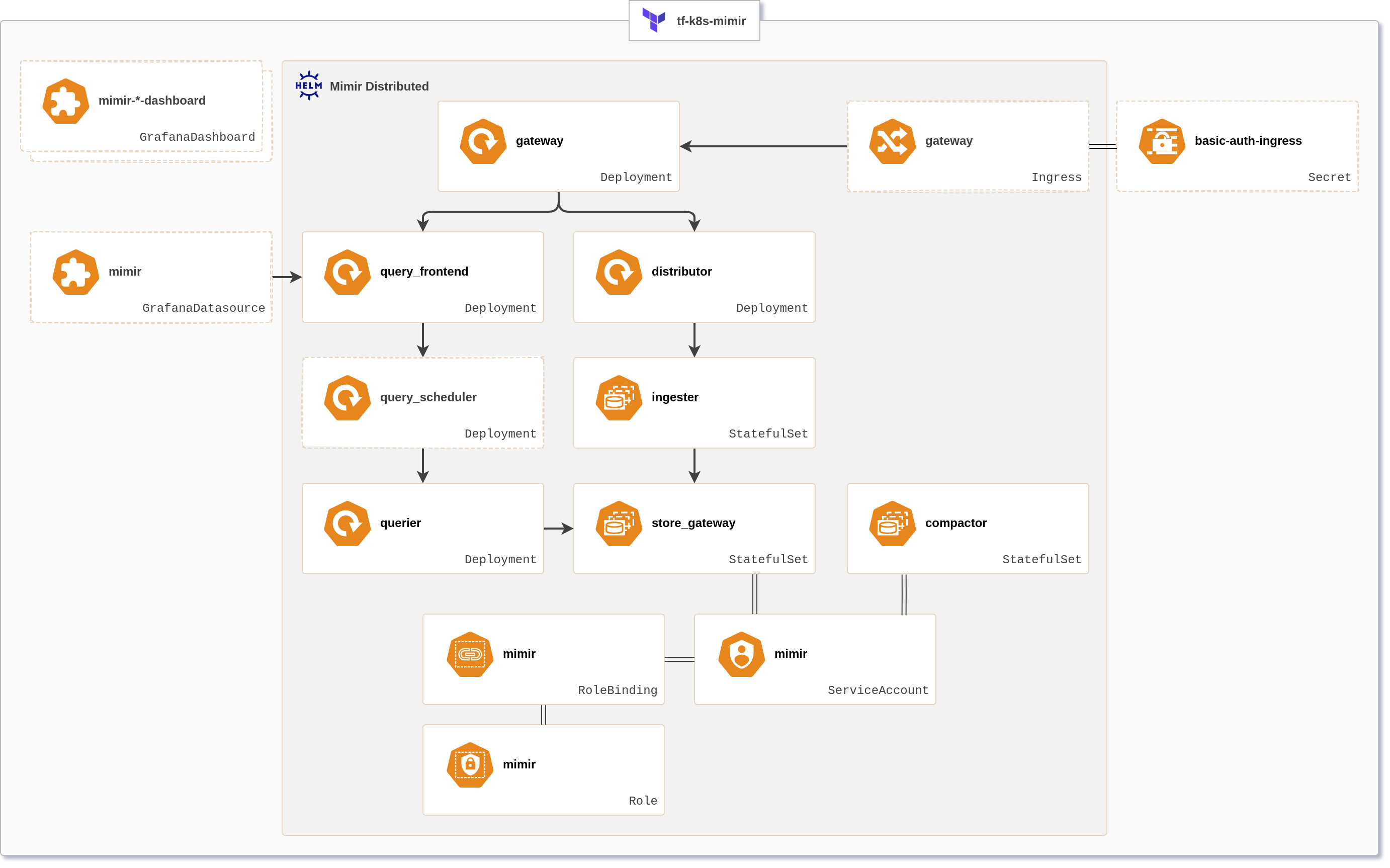

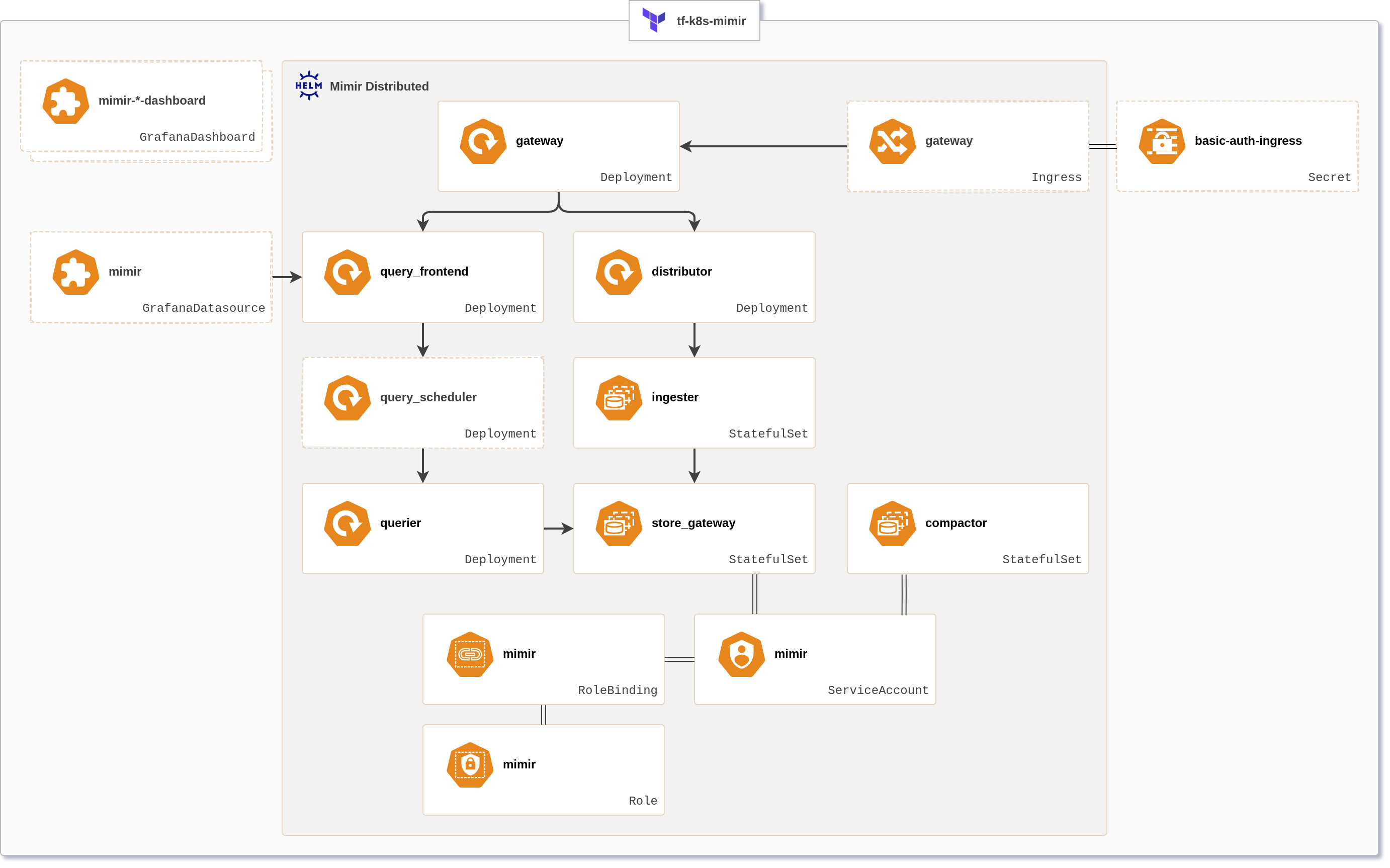

Helm-based setup of Grafana Mimir with Terraform. It is flexible and highly configurable, and uses cloud object storage to keep metrics long term. The resulting Mimir deployment is ready to be used as a remote write target for Prometheus or Grafana Alloy.

For cloud-specific implementations, the module does not support passing object storage credentials directly. Instead, it relies on the RBAC of the corresponding cloud provider. This limits where the module can be used: GCS can only be configured with GKE, and Azure Blob Storage only with AKS. S3 is the exception, to support non-AWS S3 implementations such as DigitalOcean.
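The examples on this page cover GKE and EKS only. As a rough sketch for AKS, an Azure setup could combine the `object_storage.azure_*` and `rbac.azure_client_id` inputs from the variables table below. The identity, storage account and container names, and the `var.resource_group_name` and `var.location` variables, are hypothetical. Using `blocks_bucket_name` as the Blob container name is an assumption. The role assignment on the storage account and the Workload Identity federation between the managed identity and the Kubernetes service account are not shown.

```hcl
# Hypothetical AKS setup: identity, storage account and container names are illustrative
resource "azurerm_user_assigned_identity" "mimir" {
  name                = "mimir"
  resource_group_name = var.resource_group_name # assumed variable
  location            = var.location            # assumed variable
}

module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  name_prefix = "foo"
  namespace   = "monitoring"

  object_storage = {
    type                  = "azure"
    blocks_bucket_name    = "mimir-blocks"          # assumed: existing Blob container
    azure_account_name    = "mimirstorageaccount"   # assumed: existing storage account
    azure_endpoint_suffix = "blob.core.windows.net" # suffix of the public Azure cloud
  }

  rbac = {
    cloud_provider       = "azure"
    service_account_name = "mimir"
    azure_client_id      = azurerm_user_assigned_identity.mimir.client_id
  }
}
```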

Supported features:

Mimir stores incoming metrics and the write-ahead log (WAL) in short-term storage (Kubernetes volumes), then uploads them to object storage at regular intervals (every two hours). If the volume data is destroyed, only the metrics of the current interval, not yet uploaded to object storage, are lost.
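The volumes that hold this short-term data are controlled through the `in_cluster_storage` input. The sketch below pins a storage class and enlarges the ingester volume. The `premium-rwo` class name is an assumption (it exists on GKE; use whatever class your cluster provides), and the remaining module inputs are elided.

```hcl
module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  # ... name_prefix, namespace, object_storage, rbac

  in_cluster_storage = {
    storage_class_name      = "premium-rwo" # assumed class name; if unset, the cluster default is used
    ingester_volume_size_gb = 50            # default: 20
  }
}
```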

The memory limit for all Mimir components is set as a single global parameter (`limits.memory_global_gb`) and then distributed proportionally between the enabled components. Increase this value when you enable HA or scale components up. By default, the whole stack is limited to 4 GB of RAM, which should be taken into account even for a minimal deployment.
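For example, enabling the Memcached caches (0 replicas, i.e. disabled, by default) adds components that share the same global memory budget, so the limit is raised alongside them. The replica counts and the 8 GB figure are illustrative, not sizing recommendations.

```hcl
module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  # ... name_prefix, namespace, object_storage, rbac

  components_scaling = {
    chunks_cache   = 1 # Memcached caches default to 0 replicas (disabled)
    index_cache    = 1
    metadata_cache = 1
    results_cache  = 1
    querier        = 2
  }

  # the global budget is split proportionally between all enabled components
  limits = {
    memory_global_gb = 8 # default: 4
  }
}
```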

Once you have a Corewide Solutions Portal account, this one-time action will use your browser session to retrieve credentials:

```shell
terraform login solutions.corewide.com
```

Initialize mandatory providers:

Copy and paste into your Terraform configuration and insert the variables:

```hcl
module "tf_k8s_mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2.0"

  # specify module inputs here or try one of the examples below
  ...
}
```

Initialize the setup:

```shell
terraform init
```

The Corewide DevOps team strictly follows the Semantic Versioning Specification to provide our clients with products that have predictable upgrades between versions. We recommend pinning patch versions of our modules using the pessimistic constraint operator (`~>`) to prevent breaking changes during upgrades.

To get new features during upgrades (without breaking compatibility), use `~> 1.2` and run `terraform init -upgrade`.

For the safest setup, use strict pinning with `version = "1.2.0"`.
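The three pinning strategies side by side (only one `version` line is active in a real configuration):

```hcl
module "tf_k8s_mimir" {
  source = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"

  # recommended: patch updates only (>= 1.2.0, < 1.3.0)
  version = "~> 1.2.0"

  # new features without breaking changes (>= 1.2, < 2.0); pick them up with `terraform init -upgrade`
  # version = "~> 1.2"

  # strict pinning: exactly 1.2.0
  # version = "1.2.0"

  # ... module inputs
}
```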


All notable changes to this project are documented here.

The format is based on Keep a Changelog, and this project adheres to Semantic Versioning.

* `helm_timeout` variable to customize the time for the Helm release to be installed in Kubernetes
* `limits.max_outstanding_requests_per_tenant` variable
* Initial version with support for all key features of Mimir and partial support for cloud-specific configuration

Cloud-level infra prerequisites:

* GKE tf-gcp-k8s-gke module with OIDC enabled

* Kubernetes monitoring namespace created

```hcl
locals {
  mimir_namespace        = "monitoring"
  mimir_service_acc_name = "mimir"
}

module "gke" {
  source  = "solutions.corewide.com/google-cloud/tf-gcp-k8s-gke/google"
  version = "~> 4.0"

  # ...
}

resource "kubernetes_namespace_v1" "monitoring" {
  metadata {
    name = local.mimir_namespace
  }
}

resource "google_service_account" "mimir" {
  account_id   = "mimir"
  display_name = "Service Account for connecting Mimir to GCS"
  project      = var.project_id
}

resource "google_project_iam_member" "mimir" {
  for_each = toset([
    "roles/iam.serviceAccountTokenCreator",
    # objectAdmin: Mimir reads, lists, writes and deletes blocks in the bucket
    "roles/storage.objectAdmin",
  ])

  project = var.project_id
  role    = each.key
  member  = "serviceAccount:${google_service_account.mimir.email}"
}

# Allow GCP SA to be used by K8s SA
resource "google_service_account_iam_member" "mimir" {
  service_account_id = google_service_account.mimir.name
  role               = "roles/iam.workloadIdentityUser"
  member             = "serviceAccount:${var.project_id}.svc.id.goog[${local.mimir_namespace}/${local.mimir_service_acc_name}]"
}

resource "google_storage_bucket" "mimir" {
  name          = "mimir"
  location      = "EU"
  force_destroy = true

  lifecycle_rule {
    condition {
      age = 3
    }
    action {
      type = "Delete"
    }
  }
}
```
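The lifecycle rule in the bucket example deletes objects after 3 days, while `object_storage.blocks_retention_period_days` defaults to 93 days. If metrics should be kept longer, one option is to derive both values from a single local, with the bucket rule acting only as a safety net. This is a sketch under the assumption that the module maps `blocks_retention_period_days` to Mimir's compactor-driven retention; the `metrics_retention_days` local and the 7-day margin are hypothetical. It is a variant of the bucket above, not an addition to it.

```hcl
locals {
  # hypothetical single source of truth for metrics retention, in days
  metrics_retention_days = 93
}

resource "google_storage_bucket" "mimir" {
  name          = "mimir"
  location      = "EU"
  force_destroy = true

  # safety net only: expire objects a week after Mimir's own retention should have removed them
  lifecycle_rule {
    condition {
      age = local.metrics_retention_days + 7
    }
    action {
      type = "Delete"
    }
  }
}

module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  # ... name_prefix, namespace, rbac, node_selector

  object_storage = {
    type                         = "gcs"
    blocks_bucket_name           = google_storage_bucket.mimir.name
    blocks_retention_period_days = local.metrics_retention_days
  }
}
```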

Minimal setup with only mandatory parameters:

```hcl
module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  name_prefix = "foo"
  namespace   = local.mimir_namespace

  object_storage = {
    type               = "gcs"
    blocks_bucket_name = google_storage_bucket.mimir.name
  }

  rbac = {
    cloud_provider            = "gcp"
    service_account_name      = local.mimir_service_acc_name
    gcp_service_account_email = google_service_account.mimir.email
  }

  node_selector = {
    "cloud.google.com/gke-nodepool" = module.gke.node_pools.maintenance.name
  }
}
```

Highly available setup with custom Helm timeout:

```hcl
data "google_compute_zones" "regional_available" {
  project = var.project_id
  region  = var.region
}

module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  name_prefix  = "foo"
  namespace    = local.mimir_namespace
  helm_timeout = 360

  object_storage = {
    type               = "gcs"
    blocks_bucket_name = google_storage_bucket.mimir.name
  }

  rbac = {
    cloud_provider            = "gcp"
    service_account_name      = local.mimir_service_acc_name
    gcp_service_account_email = google_service_account.mimir.email
  }

  high_availability = {
    enabled = true
    zones   = data.google_compute_zones.regional_available.names
  }

  node_selector = {
    "cloud.google.com/gke-nodepool" = module.gke.node_pools.maintenance.name
  }
}
```
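Because HA requires at least three zones, a region that exposes fewer would only fail once the module is applied. The sketch below adds an early guard using an output precondition (supported since Terraform 1.2) on the `google_compute_zones` data source from the HA example. The output name is hypothetical.

```hcl
output "mimir_ha_zones" {
  description = "Zones Mimir replicas are distributed between"
  value       = data.google_compute_zones.regional_available.names

  # fail the plan early instead of deploying an HA setup that cannot be satisfied
  precondition {
    condition     = length(data.google_compute_zones.regional_available.names) >= 3
    error_message = "Mimir HA requires at least three zones."
  }
}
```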

Isolated Mimir setup as a remote write target for Prometheus:

```hcl
module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  name_prefix = "foo"
  namespace   = local.mimir_namespace

  object_storage = {
    type               = "gcs"
    blocks_bucket_name = google_storage_bucket.mimir.name
  }

  rbac = {
    cloud_provider            = "gcp"
    service_account_name      = local.mimir_service_acc_name
    gcp_service_account_email = google_service_account.mimir.email
  }

  external_endpoint = {
    domain_name        = "mimir.example.com"
    basic_auth_enabled = true
  }

  limits = { memory_global_gb = 8 }

  node_selector = {
    "cloud.google.com/gke-nodepool" = module.gke.node_pools.maintenance.name
  }
}
```
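The external endpoint defaults to the `nginx` Ingress class and the `letsencrypt` ClusterIssuer. If your cluster uses different names, they can be overridden. The issuer name below is hypothetical, and the annotation (a standard ingress-nginx one, with an illustrative value) is an assumption about what your remote write traffic needs.

```hcl
module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  # ... name_prefix, namespace, object_storage, rbac, limits, node_selector

  external_endpoint = {
    domain_name        = "mimir.example.com"
    basic_auth_enabled = true               # only works with the Ingress Nginx controller
    ingress_class      = "nginx"
    cert_issuer_name   = "letsencrypt-prod" # hypothetical ClusterIssuer name

    ingress_annotations = {
      # raise the request body limit for large remote write batches (illustrative value)
      "nginx.ingress.kubernetes.io/proxy-body-size" = "10m"
    }
  }
}
```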

Cloud-level infra prerequisites:

* EKS tf-aws-k8s-eks module with OIDC enabled

* AWS RBAC configured via tf-aws-iam-role module

* S3 bucket deployed with tf-aws-s3-bucket module

* Kubernetes monitoring namespace created

```hcl
locals {
  mimir_namespace            = "monitoring"
  mimir_service_account_name = "mimir"
}

data "aws_region" "current" {}

module "eks" {
  source  = "solutions.corewide.com/aws/tf-aws-k8s-eks/aws"
  version = "~> 5.2"

  # ...
}

resource "kubernetes_namespace_v1" "monitoring" {
  metadata {
    name = local.mimir_namespace
  }
}

module "s3" {
  source  = "solutions.corewide.com/aws/tf-aws-s3-bucket/aws"
  version = "~> 1.0"

  # ...
}

module "mimir_iam" {
  source  = "solutions.corewide.com/aws/tf-aws-iam-role/aws"
  version = "~> 1.1"

  name                     = "AWSS3ManagementFromEKS"
  assume_with_web_identity = true
  oidc_provider_url        = module.eks.eks_identity_provider.url

  existing_policy_names = [
    "AmazonS3FullAccess",
  ]

  service_accounts = [
    {
      name      = local.mimir_service_account_name
      namespace = local.mimir_namespace
    },
  ]
}
```

Minimal setup with only mandatory parameters:

```hcl
module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  name_prefix = "foo"
  namespace   = local.mimir_namespace

  object_storage = {
    type               = "s3"
    s3_region          = data.aws_region.current.name
    blocks_bucket_name = module.s3.bucket.bucket
  }

  rbac = {
    cloud_provider = "aws"
    # ARN of the IAM role for Mimir: the role in the prerequisites is created by
    # module.mimir_iam, so point this at that module's role ARN output
    aws_iam_role_arn     = aws_iam_role.mimir.arn
    service_account_name = local.mimir_service_account_name
  }

  node_selector = {
    "eks.amazonaws.com/nodegroup" = "maintenance"
  }

  depends_on = [
    module.eks,
  ]
}
```
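If the `maintenance` node group is tainted, Mimir pods also need a matching toleration next to the node selector. The taint key and value below are hypothetical and must match the taint actually set on your nodes.

```hcl
module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  # ... name_prefix, namespace, object_storage, rbac, depends_on

  node_selector = {
    "eks.amazonaws.com/nodegroup" = "maintenance"
  }

  tolerations = [
    {
      key      = "dedicated" # hypothetical taint key
      operator = "Equal"
      value    = "maintenance"
      effect   = "NoSchedule"
    },
  ]
}
```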

Highly available setup with custom Helm timeout:

```hcl
data "aws_availability_zones" "regional_available" {
  state = "available"

  filter {
    name   = "region-name"
    values = [data.aws_region.current.name]
  }
}

module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  name_prefix  = "foo"
  namespace    = local.mimir_namespace
  helm_timeout = 360

  object_storage = {
    type               = "s3"
    s3_region          = data.aws_region.current.name
    blocks_bucket_name = module.s3.bucket.bucket
  }

  rbac = {
    cloud_provider       = "aws"
    aws_iam_role_arn     = aws_iam_role.mimir.arn
    service_account_name = local.mimir_service_account_name
  }

  high_availability = {
    enabled = true
    zones   = data.aws_availability_zones.regional_available.names
  }

  node_selector = {
    "eks.amazonaws.com/nodegroup" = "maintenance"
  }

  depends_on = [
    module.eks,
  ]
}
```
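With HA enabled, the ingester and store_gateway replica counts apply per zone, so the total number of pods grows with the number of zones, and the global memory limit is usually raised as well. All figures below are illustrative.

```hcl
module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  # ... name_prefix, namespace, helm_timeout, object_storage, rbac, node_selector, depends_on

  high_availability = {
    enabled = true
    zones   = data.aws_availability_zones.regional_available.names
  }

  components_scaling = {
    ingester      = 2 # per zone: e.g. 6 ingesters with three zones
    store_gateway = 1 # per zone
    distributor   = 2
    querier       = 2
  }

  limits = {
    memory_global_gb = 12 # illustrative; default is 4
  }
}
```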

Isolated Mimir setup as a remote write target for Prometheus:

```hcl
module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  name_prefix = "foo"
  namespace   = local.mimir_namespace

  grafana = {
    create_datasource = false
    create_dashboards = false
  }

  object_storage = {
    type               = "s3"
    s3_region          = data.aws_region.current.name
    blocks_bucket_name = module.s3.bucket.bucket
  }

  rbac = {
    cloud_provider       = "aws"
    aws_iam_role_arn     = aws_iam_role.mimir.arn
    service_account_name = local.mimir_service_account_name
  }

  external_endpoint = {
    domain_name        = "mimir.example.com"
    basic_auth_enabled = true
  }

  limits = { memory_global_gb = 8 }

  node_selector = {
    "eks.amazonaws.com/nodegroup" = "maintenance"
  }
}
```
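`external_endpoint.basic_auth_password` is generated randomly when omitted. To manage the credentials explicitly, for example to share them with a Prometheus deployment in the same configuration, a password can be generated with the `hashicorp/random` provider the module already depends on. The resource name and the `prometheus` username are hypothetical.

```hcl
resource "random_password" "mimir_basic_auth" {
  length  = 32
  special = false # avoid characters that need escaping in URLs or config files
}

module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  # ... name_prefix, namespace, grafana, object_storage, rbac, limits, node_selector

  external_endpoint = {
    domain_name         = "mimir.example.com"
    basic_auth_enabled  = true
    basic_auth_username = "prometheus"
    basic_auth_password = random_password.mimir_basic_auth.result
  }
}
```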

| Variable | Description | Type | Default | Required | Sensitive |
|---|---|---|---|---|---|
| `app_version` | Mimir version to deploy (image tag). If not set, the chart uses its corresponding default | string | | yes | no |
| `name_prefix` | Name prefix for all resources of the module | string | | yes | no |
| `namespace` | Namespace to deploy Mimir into | string | | yes | no |
| `object_storage` | Object storage parameters block | object | | yes | no |
| `rbac` | Cloud-specific RBAC settings to access object storage | object | | yes | no |
| `chart_version` | Mimir Helm chart version to deploy | string | 5.2.0 | no | no |
| `components_scaling` | Number of replicas of Mimir components (per zone). 0 disables the component (if possible) | object | {} | no | no |
| `components_scaling.chunks_cache` | Number of Memcached replicas for chunks | number | 0 | no | no |
| `components_scaling.compactor` | Number of compactor replicas | number | 1 | no | no |
| `components_scaling.distributor` | Number of distributor replicas | number | 1 | no | no |
| `components_scaling.gateway` | Number of gateway replicas | number | 1 | no | no |
| `components_scaling.index_cache` | Number of Memcached replicas for indexes | number | 0 | no | no |
| `components_scaling.ingester` | Number of ingester replicas (per zone) | number | 1 | no | no |
| `components_scaling.metadata_cache` | Number of Memcached replicas for metadata | number | 0 | no | no |
| `components_scaling.querier` | Number of querier replicas | number | 1 | no | no |
| `components_scaling.query_frontend` | Number of query_frontend replicas | number | 1 | no | no |
| `components_scaling.results_cache` | Number of Memcached replicas for query results | number | 0 | no | no |
| `components_scaling.store_gateway` | Number of store_gateway replicas (per zone) | number | 1 | no | no |
| `components_scaling.write_proxy` | Number of write proxy replicas | number | 2 | no | no |
| `external_endpoint` | Settings to enable external access to Mimir | object | {} | no | yes |
| `external_endpoint.basic_auth_enabled` | Whether to protect the external endpoint with basic HTTP authentication. Only works with the Ingress Nginx controller | bool | false | no | yes |
| `external_endpoint.basic_auth_password` | Password for basic HTTP authentication. Generated randomly if not specified | string | | no | yes |
| `external_endpoint.basic_auth_username` | Username for basic HTTP authentication | string | mimir | no | yes |
| `external_endpoint.cert_issuer_name` | Name of the cert-manager ClusterIssuer resource to generate certificates with | string | letsencrypt | no | yes |
| `external_endpoint.domain_name` | Domain name to make Mimir externally accessible at | string | | no | yes |
| `external_endpoint.ingress_annotations` | K8s annotations for the specified Ingress Class | map(any) | {} | no | yes |
| `external_endpoint.ingress_class` | Ingress Class name to target a specific Ingress controller | string | nginx | no | yes |
| `grafana` | Grafana integration settings. CRDs from Grafana Operator must be preinstalled | object | {} | no | no |
| `grafana.create_dashboards` | Whether to create a ConfigMap with Grafana dashboards for Mimir's own metrics. Also creates GrafanaDashboard CRs for Grafana to discover them. Note: metrics from Mimir components must be scraped separately by Grafana Alloy | bool | false | no | no |
| `grafana.create_datasource` | Whether to create a Grafana Operator compatible datasource to access Mimir. Grafana Operator must be preinstalled for its CRDs to exist in the cluster | bool | false | no | no |
| `grafana.datasource_name` | Name of the Mimir datasource visible in Grafana UI | string | | no | no |
| `grafana.instance_selector` | Selector to use for Grafana CRs to be detected by Grafana Operator | object | | no | no |
| `grafana.instance_selector.instance_name` | The only mandatory Grafana instance label to match | string | grafana | no | no |
| `grafana.polling_interval_seconds` | Default time interval for the Mimir datasource, seconds | number | 30 | no | no |
| `helm_timeout` | Time in seconds to wait for the Helm release to be installed in Kubernetes | number | 150 | no | no |
| `high_availability` | HA settings for zone-aware replication | object | {} | no | no |
| `high_availability.enabled` | Enable HA for zone-aware components: ingester, compactor, store_gateway | bool | false | no | no |
| `high_availability.zones` | List of zones to distribute the replicas between. If HA is enabled, must contain at least three zones | list(string) | [] | no | no |
| `high_availability.zones_node_selector_key` | Node label key used to detect which zone a cluster node belongs to | string | topology.kubernetes.io/zone | no | no |
| `in_cluster_storage` | Parameters of in-cluster persistence (used for storing data before sending it to object storage) | object | {} | no | no |
| `in_cluster_storage.compactor_volume_size_gb` | Size of the Kubernetes volume for compactor local data, GB | number | 10 | no | no |
| `in_cluster_storage.ingester_volume_size_gb` | Size of the Kubernetes volume for ingester local data, GB | number | 20 | no | no |
| `in_cluster_storage.storage_access_mode` | Access mode of the short-term storage volumes | string | ReadWriteOnce | no | no |
| `in_cluster_storage.storage_class_name` | Name of the Kubernetes storage class to use for short-term metrics storage of ingester, compactor and store_gateway. If unset, the cluster's default storage class is used | string | | no | no |
| `in_cluster_storage.store_gateway_volume_size_gb` | Size of the Kubernetes volume for store_gateway local data, GB | number | 5 | no | no |
| `limits` | Limits configuration block | object | {} | no | no |
| `limits.ingestion_burst_size` | Allowed ingestion burst size, number of samples | number | 400000 | no | no |
| `limits.ingestion_rate` | Ingestion rate limit, samples per second | number | 40000 | no | no |
| `limits.max_global_series_per_user` | Maximum number of in-memory series across the cluster before replication. 0 to disable | number | 1000000 | no | no |
| `limits.max_label_names_per_series` | Maximum number of labels per series | number | 50 | no | no |
| `limits.max_outstanding_requests_per_tenant` | Allowed number of outstanding requests per tenant | number | 800 | no | no |
| `limits.memory_global_gb` | Global RAM limit for all Mimir components together, GB | number | 4 | no | no |
| `limits.out_of_order_time_window` | How far back in time out-of-order samples are still accepted | string | 10m | no | no |
| `limits.request_burst_size` | Allowed push request burst size. 0 to disable | number | 0 | no | no |
| `limits.request_rate` | Push request rate limit, requests per second. 0 to disable | number | 0 | no | no |
| `log_level` | Logging level for Mimir components | string | info | no | no |
| `node_selector` | Node selector for Mimir components. Ignored by zone-aware components when HA is enabled | map(string) | {} | no | no |
| `object_storage.azure_account_name` | Azure account name to use for object storage connections. Required if type is set to azure | string | | no | no |
| `object_storage.azure_endpoint_suffix` | Endpoint suffix to use for Azure Blob Storage connections. Required if type is set to azure | string | | no | no |
| `object_storage.blocks_bucket_name` | Name of the bucket to use for TSDB blocks | string | | yes | no |
| `object_storage.blocks_retention_period_days` | How long metrics remain available in object storage, days | number | 93 | no | no |
| `object_storage.s3_advanced_settings` | Extra parameters for the S3 connection: encryption, explicit credentials, etc. (see the official documentation for the full list). Only works with type set to s3 | map(any) | {} | no | no |
| `object_storage.s3_endpoint` | S3 endpoint to connect to bucket data. Required if type is set to s3 | string | | no | no |
| `object_storage.s3_region` | Region to use for S3 requests. Required if type is set to s3 | string | | no | no |
| `object_storage.type` | Type of object storage to use. Supported values: s3, gcs, azure | string | | yes | no |
| `rbac.aws_iam_role_arn` | ARN of the AWS IAM role the service account is bound to in order to access S3 buckets. Requires cloud_provider set to aws | string | | no | no |
| `rbac.azure_client_id` | Azure client ID with privileges to access Azure Blob Storage. Requires cloud_provider set to azure | string | | no | no |
| `rbac.cloud_provider` | Cloud provider to adapt configuration to. Available values: aws, gcp, azure | string | | yes | no |
| `rbac.gcp_service_account_email` | | string | | no | no |
| `rbac.service_account_name` | Name of the Kubernetes service account created by the module | string | | yes | no |
| `tolerations` | K8s tolerations for Mimir components | list(object) | [] | no | no |
| `tolerations[*].effect` | Taint effect to match | string | | no | no |
| `tolerations[*].key` | Taint key that the toleration applies to | string | | no | no |
| `tolerations[*].operator` | Operator to compare the taint value with | string | | no | no |
| `tolerations[*].value` | Taint value that the toleration applies to | string | | no | no |
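The `grafana.*` inputs only take effect when the Grafana Operator CRDs are present in the cluster. A sketch that enables both the datasource and the dashboards; the datasource name is illustrative, and the `instance_name` value must match the label of your own Grafana instance.

```hcl
module "mimir" {
  source  = "solutions.corewide.com/kubernetes/tf-k8s-mimir/helm"
  version = "~> 1.2"

  # ... name_prefix, namespace, object_storage, rbac

  grafana = {
    create_datasource        = true
    create_dashboards        = true    # Mimir's own metrics still need to be scraped, e.g. by Grafana Alloy
    datasource_name          = "Mimir" # illustrative name shown in Grafana UI
    polling_interval_seconds = 30

    instance_selector = {
      instance_name = "grafana" # label of the Grafana instance managed by Grafana Operator
    }
  }
}
```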

| Output | Description | Type | Sensitive |
|---|---|---|---|
| `connection_params_external` | Mimir connection parameters (external) | computed | yes |
| `connection_params_internal` | Mimir connection parameters (in-cluster) | map | no |
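`connection_params_external` is sensitive, so Terraform requires any root-module output that exposes it to be marked sensitive as well. The output names below are hypothetical. Because the keys inside these maps are not documented here, the example passes them through whole.

```hcl
output "mimir_connection_internal" {
  value = module.mimir.connection_params_internal
}

output "mimir_connection_external" {
  value     = module.mimir.connection_params_external
  sensitive = true # required: the module output is sensitive
}
```

`terraform output -json mimir_connection_external` then prints the value in plain text, so handle it accordingly.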

| Dependency | Version | Kind |
|---|---|---|
| terraform | >= 1.3 | CLI |
| hashicorp/helm | ~> 2.17 | provider |
| hashicorp/kubernetes | ~> 2.36 | provider |
| hashicorp/random | ~> 3.7 | provider |
| tf-k8s-crd | ~> 2.0 | module |
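The CLI and provider constraints from the dependency table, expressed as a `terraform` block in the root module (a sketch; `tf-k8s-crd` is a module dependency and is not declared here). The Helm and Kubernetes providers still need to be configured with credentials for your cluster.

```hcl
terraform {
  required_version = ">= 1.3"

  required_providers {
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.17"
    }
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "~> 2.36"
    }
    random = {
      source  = "hashicorp/random"
      version = "~> 3.7"
    }
  }
}
```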